ChatGPT enters the room and people's minds

I managed to test ChatGPT quite soon after it was released, and one of my first requests was for a top 10 list of the most important papers on a topic I wasn't an expert in (I needed to teach myself enough to review a conference paper). A few seconds later the system fluently generated a list with short descriptions, and I was ready to dig in. The problem was that none, NONE, of the papers existed. Time will tell how fact-checking and external databases can be used, but for now this thing cannot be trusted. We have created a monster, and RLHF gave it a human mask, but we need to be aware of the monstrosity hidden underneath the fluency of the generated text.

Suddenly my semantic primes grant topic becomes more important: we need to rethink how we build foundation models and how we intervene in the learning process. Without new ideas beyond tensor arithmetic, we can't open the box, the box cannot fully explain itself, and our ability to control and regulate it will be limited. The Transformer architecture rules the field, but its usefulness makes people stick to it instead of trying to change the paradigm. Large Language Models might be a way of simulating human perception before we develop hardware solutions for more human-like learning algorithms.

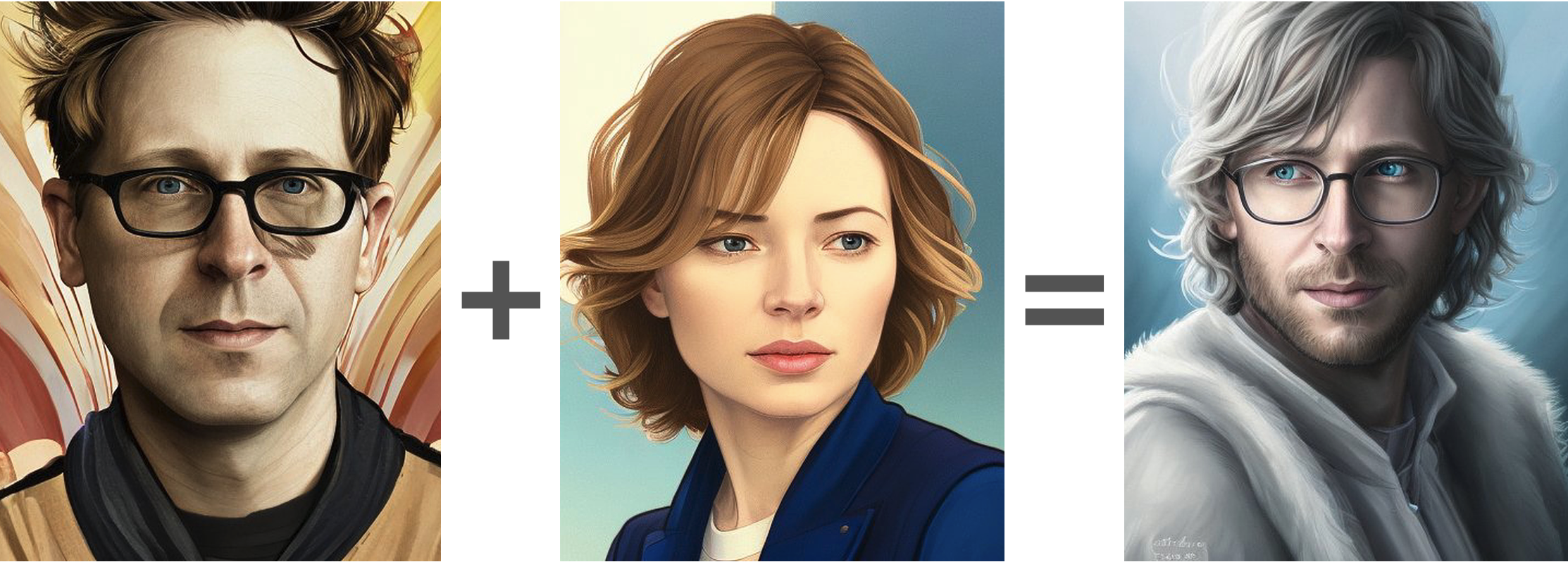

Still, foundation models will be used in a gazillion ways we haven't anticipated. One example: combining the parents' features to predict our son's future face :)
