Toward Information Science That Understands Human Beings and Analyzes Society

Search Engines That Spur Keyword-Transcending Free Association

Miki HASEYAMA, Doctor of Engineering,

Professor of the Graduate School of Information Science and Technology, Hokkaido University,

Division of Media and Network Technologies, Research Group of Information Media Science and Technology

Profile:

Completed a master’s degree at the Graduate School of Information Science and Technology, Hokkaido University, in 1988, and a PhD at the same school in 1993. After positions including assistant at the Research Institute for Electronic Science, Hokkaido University, assistant professor of the Faculty of Engineering, Hokkaido University, and visiting professor at the University of Washington, she assumed her current position of professor of the Graduate School of Information Science and Technology, Hokkaido University, in 2006. Her fields of expertise are the development of theories of multimedia signal processing, including image, video, and audio signal processing, and their applied research. Professor Haseyama is a member of the Institute of Electrical and Electronics Engineers (IEEE), Institute of Electronics, Information and Communication Engineers (IEICE), Institute of Image Information and Television Engineers (ITE), Acoustical Society of Japan (ASJ), and the Information Processing Society of Japan (IPSJ). She is also a member of the Engineering Academy of Japan (EAJ) and the Science Council of Japan (SCJ). Among other duties, Professor Haseyama has served as an Expert Member of the Information and Communication Council, Ministry of Internal Affairs and Communications, Japan (2007–11), and Board Member/Chief Technical Adviser of the Information Grand Voyage Project, Ministry of Economy, Trade and Industry, Japan (2007–09).

Media and Network Technologies: Advancing into a new phase as an academic discipline

What is happening at the forefront of Media and Network Technologies?

Haseyama: Until now, Media and Network Technologies have been considered technologies for providing information and services in virtual space. However, it is now becoming possible to use digital data to analyze human behaviors and thoughts in the real world, and estimate how they will impact the formation of society. Digital data can now be used as a tool to explore the very subject of human beings. I think this means that information science has entered a new phase as an academic discipline.

What kinds of research are you conducting in your lab?

Haseyama: The Internet hosts a variety of websites, such as blogs, and services, typified by social networking services. Digital data representing human behaviors and thoughts, such as documents, images, and video, are being stored on the Web. When you draw out similarities and differences in the data by analyzing them from a variety of angles, and extract connections to real-world phenomena, what becomes visible? What do you learn as something becomes visible? We are developing theories that create paths to this qualitative understanding by quantifying and visualizing the vast amount of data laid out haphazardly on the Web.

Currently, we are conducting research in five basic themes in the Laboratory of Media Dynamics: (1) encoding, (2) decoding, (3) recognition, (4) semantic understanding, and (5) computer graphics. Furthermore, from technologies in these five basic areas, we have been developing Cyber Space Navigator, a next-generation information access system. This system organically traverses diverse media. Technologies in themes (1)–(3) are useful for arranging conditions suitable for analysis. They are, for example, technologies for restoring degraded images, removing unnecessary objects, and automatically detecting humans and objects. We are developing theme (4) technologies to understand semantic contents, including the semantic contents of images and videos. We are creating computing systems that automatically understand what is included in the target content from features such as colors, patterns, shapes, and movements. Technologies in theme (5) are being developed for generating images and videos. We are pursuing research that makes it possible to automatically create 3D graphical models and produce animation.

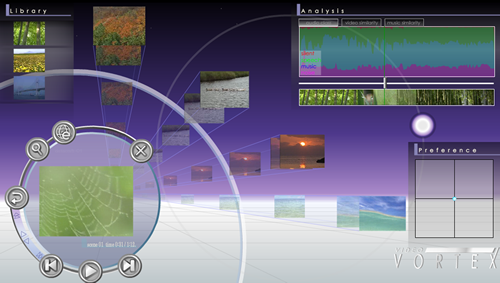

By applying these technologies, we have built applications of Cyber Space Navigator. Representative examples are Image Vortex and Video Vortex, interfaces that efficiently find what the user is looking for among the images and videos on the Web.

Search engine that can search for images without keywords

What kinds of features do Image Vortex and Video Vortex have?

Haseyama: What is most characteristic about these technologies is that keyword input is not necessary. For example, suppose you’re searching for a photo of Hokkaido’s beautiful scenery. With existing search services, photos are manually tagged with different types of data (like the date and place of the photo) and keywords such as “grassland,” “mountains,” and “blue sky.” You then conduct a search by inputting words (keywords) that come close to describing the image you have in your head. However, because human senses are very vague, what you are really looking for are images described by words like “exhilarating” and “scenery where I can feel the wind.” After all, you don’t have a concrete image in mind in the first place. Image Vortex and Video Vortex are effective in such cases, when you cannot conduct a search using explicit queries (keyword-based questions or example images).

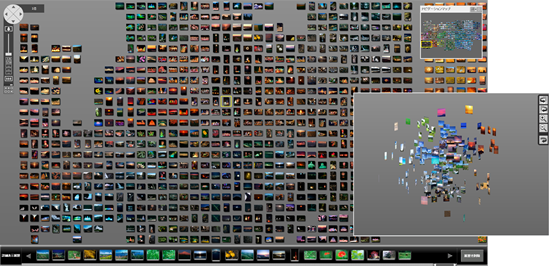

With Image Vortex, features (colors, patterns, contours of objects, positions on the screen) computed from images in a database are converted into numerical values, enabling the technology to automatically recognize the kind of image, including its content, from its feature quantities. Furthermore, by comparing the feature quantities of each image, similar and dissimilar images can be distinguished. The difference between two images can be expressed as a distance (Explanation 1/Figure 1). The user can view groups of images floating in 3D space on the interface, and drill down to the images they are looking for. A service that applies Image Vortex, called Image Cruiser (http://imagecruiser.jp/), is already available.
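As a rough illustration of expressing the difference between two images as a distance, the sketch below turns each image into a numerical feature vector and compares the vectors. The coarse color histogram, the Euclidean metric, and all names and toy pixel data here are assumptions chosen for brevity; the article does not state which features or distance measure Image Vortex actually uses.

```python
import math

def color_histogram(pixels, bins=4):
    """Convert a list of (r, g, b) pixels (0-255) into a normalized histogram.

    Assumption: a coarse color histogram stands in for the richer features
    (colors, patterns, contours, positions) mentioned in the article.
    """
    hist = [0.0] * (bins ** 3)
    step = 256 // bins
    for r, g, b in pixels:
        idx = (r // step) * bins * bins + (g // step) * bins + (b // step)
        hist[idx] += 1.0
    total = len(pixels)
    return [h / total for h in hist] if total else hist

def feature_distance(f1, f2):
    """Euclidean distance between two feature vectors (an assumed metric)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(f1, f2)))

# Hypothetical toy "images", given as flat lists of pixels.
sky = [(40, 90, 220)] * 90 + [(250, 250, 250)] * 10
sea = [(30, 80, 200)] * 85 + [(250, 250, 250)] * 15
grass = [(40, 180, 60)] * 100

f_sky, f_sea, f_grass = map(color_histogram, (sky, sea, grass))

# Similar images end up close together, dissimilar ones far apart.
print(feature_distance(f_sky, f_sea))
print(feature_distance(f_sky, f_grass))
```

Under these assumptions, the two mostly blue images are separated by a much smaller distance than the blue and green ones, which is the property an interface needs in order to group similar images together.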

Video Vortex (Explanation 2/Figure 2) is an interface for videos. It integrates characteristics of a video such as images, sounds, music, and text to search for and recommend similar videos. The system can adjust the balance between “what is visually similar” and “what is acoustically similar” based on user preferences, bringing the user to his or her desired video more efficiently. Also, by incorporating similarities in sound and music, Video Vortex can recommend videos in completely different genres, bringing about new discoveries and realizations that surpass the user’s expectations. We are also planning to offer Video Vortex as a commercial service.
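One way to picture the adjustment between visual and acoustic similarity is a weighted blend of two similarity scores. The sketch below is only an assumption-laden illustration: the cosine similarity, the single `visual_weight` parameter, and the toy feature vectors and video names are hypothetical and are not taken from Video Vortex itself.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity of two feature vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def combined_similarity(query, candidate, visual_weight=0.5):
    """Blend visual and audio similarity; visual_weight is a hypothetical user preference."""
    vis = cosine_similarity(query["visual"], candidate["visual"])
    aud = cosine_similarity(query["audio"], candidate["audio"])
    return visual_weight * vis + (1.0 - visual_weight) * aud

def recommend(query, library, visual_weight=0.5, top_k=2):
    """Return the names of the top_k most similar videos in the library."""
    scored = [(combined_similarity(query, feats, visual_weight), name)
              for name, feats in library.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]

# Hypothetical, hand-made feature vectors for illustration only.
query = {"visual": [1.0, 0.0], "audio": [0.0, 1.0]}
library = {
    "looks_alike": {"visual": [0.9, 0.1], "audio": [1.0, 0.0]},
    "sounds_alike": {"visual": [0.0, 1.0], "audio": [0.1, 0.9]},
}

print(recommend(query, library, visual_weight=0.9, top_k=1))
print(recommend(query, library, visual_weight=0.1, top_k=1))
```

With a high `visual_weight` the visually similar video ranks first, and with a low one the acoustically similar video does, which mirrors how a recommendation can cross into a visually unrelated genre when sound is emphasized.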

Induce serendipity and encounter what you’re looking for

How will these technologies change our lives?

Haseyama: Searches using keywords are constrained by the user’s skill and values. Image Vortex and Video Vortex automatically present the candidates found by a search engine as groups of images (or videos). One image or video spurs a cascade of associations, and from time to time you will discover new relationships that you had not anticipated. I think this is a groundbreaking system that will promote serendipity.

Currently, the system is centered on visual and aural information, such as images, videos, music, and sounds. However, if other human senses (smell, taste, touch) and emotions can be represented with numerical values, then human awareness and behaviors can be analyzed in further detail.

If such a system can permeate society as an infrastructure, then it will transcend the boundaries of information science and become beneficial to all sorts of fields and industries. I think we can anticipate it becoming a tool for understanding human beings and analyzing society.

Explanation

Explanation 1. Image Cruiser, the Next-Generation Image Grouping Technology

Based on the distance scale defined by Image Vortex, Image Cruiser is an interface that achieves high-speed visualization of images. The technology encompasses a vast number of images, and the user can arrive at the desired image easily and quickly. The current system enables a service that supports 1 million images.

Explanation 2. Video Vortex, the Next-Generation Associative Video and Music Search Engine

The video selected by the user is shown in the lower left-hand corner. Videos with similar visual characteristics are shown in the upper left-hand corner, and videos with similar music and audio characteristics are shown in the lower right-hand corner. None of the videos are tagged. Video Vortex makes automatic recommendations by computing and comparing video and audio feature quantities. As the user selects videos in the interface that are close to what they have in mind, Video Vortex recommends more videos with similar images and music/audio. A network of associations proliferates, leading to discoveries unattainable with keywords alone.